Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 Exam Dumps - Databricks Certified Associate Developer for Apache Spark 3.5 – Python

Searching for workable clues to ace the Databricks Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 Exam? You’re on the right place! ExamCert has realistic, trusted and authentic exam prep tools to help you achieve your desired credential. ExamCert’s Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 PDF Study Guide, Testing Engine and Exam Dumps follow a reliable exam preparation strategy, providing you the most relevant and updated study material that is crafted in an easy to learn format of questions and answers. ExamCert’s study tools aim at simplifying all complex and confusing concepts of the exam and introduce you to the real exam scenario and practice it with the help of its testing engine and real exam dumps

What is the behavior for function date_sub(start, days) if a negative value is passed into the days parameter?

A developer notices that all the post-shuffle partitions in a dataset are smaller than the value set for spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold.

Which type of join will Adaptive Query Execution (AQE) choose in this case?

42 of 55.

A developer needs to write the output of a complex chain of Spark transformations to a Parquet table called events.liveLatest.

Consumers of this table query it frequently with filters on both year and month of the event_ts column (a timestamp).

The current code:

from pyspark.sql import functions as F

final = df.withColumn("event_year", F.year("event_ts")) \

.withColumn("event_month", F.month("event_ts")) \

.bucketBy(42, ["event_year", "event_month"]) \

.saveAsTable("events.liveLatest")

However, consumers report poor query performance.

Which change will enable efficient querying by year and month?

22 of 55.

A Spark application needs to read multiple Parquet files from a directory where the files have differing but compatible schemas.

The data engineer wants to create a DataFrame that includes all columns from all files.

Which code should the data engineer use to read the Parquet files and include all columns using Apache Spark?

A Spark engineer is troubleshooting a Spark application that has been encountering out-of-memory errors during execution. By reviewing the Spark driver logs, the engineer notices multiple "GC overhead limit exceeded" messages.

Which action should the engineer take to resolve this issue?

17 of 55.

A data engineer has noticed that upgrading the Spark version in their applications from Spark 3.0 to Spark 3.5 has improved the runtime of some scheduled Spark applications.

Looking further, the data engineer realizes that Adaptive Query Execution (AQE) is now enabled.

Which operation should AQE be implementing to automatically improve the Spark application performance?

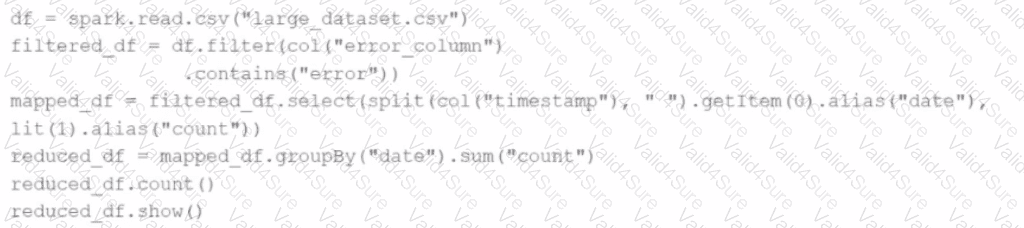

Given the code:

df = spark.read.csv("large_dataset.csv")

filtered_df = df.filter(col("error_column").contains("error"))

mapped_df = filtered_df.select(split(col("timestamp"), " ").getItem(0).alias("date"), lit(1).alias("count"))

reduced_df = mapped_df.groupBy("date").sum("count")

reduced_df.count()

reduced_df.show()

At which point will Spark actually begin processing the data?

A data engineer is reviewing a Spark application that applies several transformations to a DataFrame but notices that the job does not start executing immediately.

Which two characteristics of Apache Spark's execution model explain this behavior?

Choose 2 answers: