Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 Exam Dumps - Databricks Certified Associate Developer for Apache Spark 3.5 – Python

Looking for a reliable way to prepare for the Databricks Certified Associate Developer for Apache Spark 3.5 exam (Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5)? You’re in the right place. ExamCert offers realistic exam prep tools to help you earn the credential. ExamCert’s PDF Study Guide, Testing Engine and Exam Dumps provide relevant, up-to-date study material in an easy-to-learn question-and-answer format. The study tools aim to simplify the complex and confusing concepts of the exam, and the testing engine lets you practice under conditions that resemble the real exam.

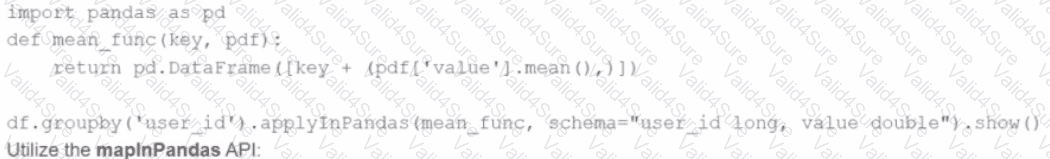

A developer is working with a pandas DataFrame containing user behavior data from a web application.

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

A)

Use the applyInPandas API

B)

C)

D)
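For reference, the `applyInPandas` approach named in option A can be sketched as follows. This is a minimal illustration, not the exam's own code: the column names `user_id` and `duration`, the output schema, and both function names are assumptions made for the example.

```python
def total_duration_per_user(pdf):
    """Runs on a worker, once per group. `pdf` is a pandas DataFrame
    holding every row for a single user_id (hypothetical column)."""
    out = pdf[["user_id"]].head(1).copy()
    out["total_duration"] = float(pdf["duration"].sum())
    return out


def parallel_group_summary(spark, pandas_df):
    """Convert a pandas DataFrame to a Spark DataFrame, then run the
    per-group pandas function in parallel across the cluster."""
    sdf = spark.createDataFrame(pandas_df)
    return sdf.groupBy("user_id").applyInPandas(
        total_duration_per_user,
        schema="user_id string, total_duration double",  # assumed output schema
    )
```

Here `spark` is an existing `SparkSession`. Each group is handed to the function as an ordinary pandas DataFrame, so existing pandas logic can be reused while Spark distributes the groups across workers.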

39 of 55.

A Spark developer is developing a Spark application to monitor task performance across a cluster.

One requirement is to track the maximum processing time for tasks on each worker node and consolidate this information on the driver for further analysis.

Which technique should the developer use?

A data engineer has been asked to produce a Parquet table which is overwritten every day with the latest data. The downstream consumer of this Parquet table has a hard requirement that the data in this table is produced with all records sorted by the market_time field.

Which line of Spark code will produce a Parquet table that meets these requirements?
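The answer options are not reproduced in the text, so the following is only a sketch of one write pattern that fits the stated requirements: a global sort on `market_time` followed by an overwrite in Parquet format. The function name and the `table_name` argument are hypothetical.

```python
def overwrite_sorted_table(df, table_name):
    """Globally sort by market_time, then replace the table's contents.

    orderBy performs a total sort across all partitions, whereas
    sortWithinPartitions would only order rows inside each partition.
    """
    (
        df.orderBy("market_time")   # global sort (causes a shuffle)
        .write
        .format("parquet")
        .mode("overwrite")          # replace the previous day's data
        .saveAsTable(table_name)
    )
```

The distinction that matters for a requirement like this is between `orderBy`/`sort`, which order the whole dataset, and `sortWithinPartitions`, which does not give a global ordering.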

28 of 55.

A data analyst builds a Spark application to analyze finance data and performs the following operations:

filter, select, groupBy, and coalesce.

Which operation results in a shuffle?
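To make the four operations concrete, here is a small sketch of such a pipeline. The column names `account_id` and `amount` and the function name are assumptions for illustration only. Of the four, `groupBy` followed by an aggregation is the wide transformation: rows with the same key must be brought together, which shows up as an `Exchange` node in the physical plan. `filter` and `select` are narrow, and `coalesce` merges existing partitions without a full shuffle.

```python
def build_finance_pipeline(df):
    """Chain the four operations from the question on a Spark DataFrame."""
    return (
        df.filter("amount > 0")          # narrow: evaluated per partition
        .select("account_id", "amount")  # narrow: column pruning only
        .groupBy("account_id")           # wide: needs a shuffle by key
        .count()                         # aggregation that completes the groupBy
        .coalesce(1)                     # merges partitions, no full shuffle
    )
```

Calling `.explain()` on the returned DataFrame prints the physical plan, where the shuffle introduced by the aggregation can be inspected.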

45 of 55.

Which feature of Spark Connect should be considered when designing an application that plans to enable remote interaction with a Spark cluster?

A data scientist is working with a Spark DataFrame called customerDF that contains customer information. The DataFrame has a column named email with customer email addresses. The data scientist needs to split this column into username and domain parts.

Which code snippet splits the email column into username and domain columns?

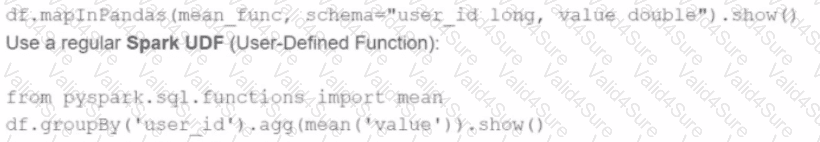

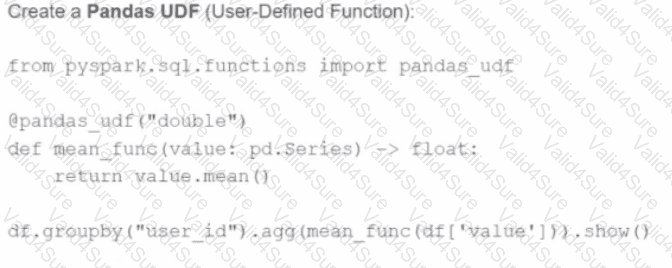

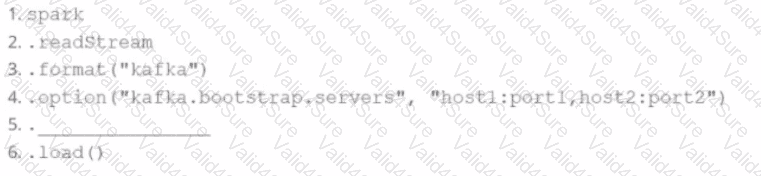
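The answer options survive only as images, so the snippet below is a sketch of one common way to perform the split rather than a reproduction of any listed option. It uses the Spark SQL `split` function through `selectExpr`; the function name `split_email` is hypothetical.

```python
def split_email(customer_df):
    """Add username and domain columns derived from the email column.

    split(email, '@') yields an array; index 0 is the part before the
    '@' and index 1 is the part after it. The same result can be built
    with pyspark.sql.functions: split(col("email"), "@").getItem(0).
    """
    return customer_df.selectExpr(
        "*",
        "split(email, '@')[0] AS username",
        "split(email, '@')[1] AS domain",
    )
```

Usage would look like `customerDF = split_email(customerDF)`, which keeps all existing columns and appends the two new ones.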

A data engineer wants to create a Streaming DataFrame that reads from a Kafka topic called feed.

Which code fragment should be inserted in line 5 to meet the requirement?

Code context:

    spark \
      .readStream \
      .format("kafka") \
      .option("kafka.bootstrap.servers", "host1:port1,host2:port2") \
      .[LINE 5] \
      .load()

Options:

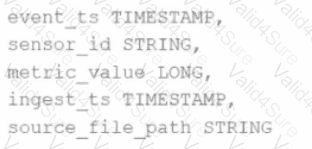
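The options are only available as an image, so as a hedged sketch: in Spark's Kafka source, a single topic is normally selected with the `subscribe` option. The function name `read_feed_stream` and its default argument are assumptions for illustration; the bootstrap server string is the placeholder from the code context above.

```python
def read_feed_stream(spark, bootstrap_servers="host1:port1,host2:port2"):
    """Return a streaming DataFrame that reads the Kafka topic 'feed'."""
    return (
        spark.readStream
        .format("kafka")
        .option("kafka.bootstrap.servers", bootstrap_servers)
        .option("subscribe", "feed")  # the fragment that fills line 5
        .load()
    )
```

The Kafka source also accepts `subscribePattern` (a regex over topic names) and `assign` (explicit topic partitions), but only one of the three may be set for a given stream.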

Given the schema:

    event_ts TIMESTAMP,
    sensor_id STRING,
    metric_value LONG,
    ingest_ts TIMESTAMP,
    source_file_path STRING

The goal is to deduplicate based on: event_ts, sensor_id, and metric_value.
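As a minimal sketch of deduplicating on just those three columns, `dropDuplicates` can be given a column subset. The names `DEDUP_KEYS` and `deduplicate_events` are hypothetical and are not taken from the answer options.

```python
# Columns that define a duplicate, as stated in the goal above.
DEDUP_KEYS = ["event_ts", "sensor_id", "metric_value"]


def deduplicate_events(df):
    """Keep one row per (event_ts, sensor_id, metric_value) combination.

    ingest_ts and source_file_path are ignored when comparing rows;
    which of the duplicate rows is retained is not guaranteed.
    """
    return df.dropDuplicates(DEDUP_KEYS)
```

Calling `dropDuplicates()` with no arguments would instead compare all five columns, so rows that differ only in `ingest_ts` or `source_file_path` would all be kept.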

Options: