Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 Exam Dumps - Databricks Certified Associate Developer for Apache Spark 3.5 – Python

Looking for a workable way to pass the Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 exam? You're in the right place. ExamCert offers realistic, trusted, and authentic exam prep tools to help you earn the credential. ExamCert's Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 PDF Study Guide, Testing Engine, and Exam Dumps follow a reliable preparation strategy and provide relevant, up-to-date study material in an easy-to-learn question-and-answer format. The study tools aim to simplify the exam's complex and confusing concepts and to familiarize you with the real exam scenario, which you can practice with the testing engine and real exam dumps.

A data engineer is asked to build an ingestion pipeline for a set of Parquet files delivered by an upstream team on a nightly basis. The data is stored in a directory structure with a base path of "/path/events/data". The upstream team drops daily data into the underlying subdirectories following the convention year/month/day.

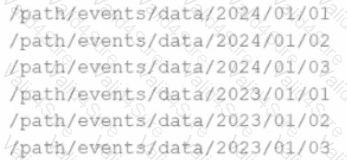

A few examples of the directory structure are:

Which of the following code snippets will read all the data within the directory structure?
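As a rough sketch of one way to approach this scenario: the subdirectories follow a plain year/month/day layout rather than Hive-style `key=value` names, so the Parquet reader can be asked to descend into nested folders with the `recursiveFileLookup` option. The helper name `read_all_events` is hypothetical, and `spark` is assumed to be an existing SparkSession.

```python
def read_all_events(spark, base_path="/path/events/data"):
    """Read every Parquet file found anywhere below base_path.

    `spark` is assumed to be an active SparkSession; the function name
    is illustrative and not part of any Spark API.
    """
    return (
        spark.read
        # Walk nested subdirectories (year/month/day) under base_path.
        # Note: this option also disables partition inference.
        .option("recursiveFileLookup", "true")
        .parquet(base_path)
    )
```

Because recursive lookup turns off partition inference, the year, month, and day values would not appear as columns in the result under this approach.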

40 of 55.

A developer wants to refactor older Spark code to take advantage of built-in functions introduced in Spark 3.5.

The original code:

    from pyspark.sql import functions as F

    min_price = 110.50
    result_df = prices_df.filter(F.col("price") > min_price).agg(F.count("*"))

Which code block should the developer use to refactor the code?
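One possible refactoring, offered as a sketch rather than the official answer, uses `count_if`, which the PySpark functions module exposes as of 3.5. It folds the filter and the count into a single aggregation. The wrapper name `count_prices_above` is hypothetical, and `prices_df` is assumed to have a numeric `price` column.

```python
def count_prices_above(prices_df, min_price):
    """Count rows whose price exceeds min_price in one aggregation.

    The function name is illustrative; prices_df is assumed to be a
    Spark DataFrame with a numeric `price` column.
    """
    # Imported here so the sketch can be loaded without a Spark install.
    from pyspark.sql import functions as F

    # count_if counts the rows for which the boolean condition is true,
    # so no separate filter(...) step is needed before the aggregation.
    return prices_df.agg(F.count_if(F.col("price") > min_price))
```

Calling `count_prices_above(prices_df, 110.50)` would return a one-row DataFrame holding the count.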

43 of 55.

An organization has been running a Spark application in production and is considering disabling the Spark History Server to reduce resource usage.

What will be the impact of disabling the Spark History Server in production?

49 of 55.

In the code block below, aggDF contains aggregations on a streaming DataFrame:

    aggDF.writeStream \
        .format("console") \
        .outputMode("???") \
        .start()

Which output mode at line 3 ensures that the entire result table is written to the console during each trigger execution?
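For context, Structured Streaming defines three output modes: `append`, `update`, and `complete`. Of these, `complete` rewrites the whole result table on every trigger. The sketch below wires that mode into a console sink; the helper name `start_console_query` is hypothetical, and `agg_df` is assumed to be a streaming DataFrame that contains aggregations.

```python
def start_console_query(agg_df, output_mode="complete"):
    """Start a console sink for a streaming aggregation.

    The function name is illustrative. agg_df is assumed to be a
    streaming DataFrame with aggregations applied.
    """
    return (
        agg_df.writeStream
        .format("console")
        # "complete" emits the entire result table on each trigger;
        # "update" would emit only the rows that changed.
        .outputMode(output_mode)
        .start()
    )
```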

An engineer has two DataFrames: df1 (small) and df2 (large). A broadcast join is used:

    from pyspark.sql.functions import broadcast

    result = df2.join(broadcast(df1), on='id', how='inner')

What is the purpose of using broadcast() in this scenario?

Which configuration can be enabled to optimize the conversion between Pandas and PySpark DataFrames using Apache Arrow?
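As an illustration, the Arrow-based conversion path in PySpark is controlled by the `spark.sql.execution.arrow.pyspark.enabled` setting. The sketch below turns it on for an existing session; the helper name `enable_arrow` is hypothetical, and `spark` is assumed to be an active SparkSession.

```python
ARROW_KEY = "spark.sql.execution.arrow.pyspark.enabled"

def enable_arrow(spark):
    """Turn on Arrow-based columnar transfers for this session.

    The function name is illustrative; `spark` is assumed to be an
    active SparkSession.
    """
    spark.conf.set(ARROW_KEY, "true")
    # Read the value back so callers can confirm the setting took effect.
    return spark.conf.get(ARROW_KEY)
```

With the setting enabled, calls such as `df.toPandas()` and `spark.createDataFrame(pandas_df)` are the conversions that can use Arrow.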

A Spark application suffers from too many small tasks due to excessive partitioning. How can this be fixed without a full shuffle?
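A sketch of one common remedy: `coalesce()` merges existing partitions into fewer ones without redistributing every row, whereas `repartition()` performs a full shuffle. The helper name `reduce_partitions` and the guard around it are illustrative assumptions, not part of the Spark API.

```python
def reduce_partitions(df, target_partitions):
    """Lower the partition count of df without a full shuffle.

    The function name is illustrative; df is assumed to be a Spark
    DataFrame.
    """
    current = df.rdd.getNumPartitions()
    if target_partitions >= current:
        # coalesce() can only lower the partition count, so nothing to do.
        return df
    # coalesce() combines existing partitions (a narrow dependency)
    # instead of moving every row the way repartition() does.
    return df.coalesce(target_partitions)
```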

13 of 55.

A developer needs to produce a Python dictionary using data stored in a small Parquet table, which looks like this:

| region_id | region_name |
|-----------|-------------|
| 10        | North       |
| 12        | East        |
| 14        | West        |

The resulting Python dictionary must contain a mapping of region_id to region_name, containing the smallest 3 region_id values.

Which code fragment meets the requirements?
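One way to satisfy the requirement, sketched under assumptions: sort by `region_id`, keep the first three rows, collect them to the driver, and build the dictionary with a comprehension. The helper name `smallest_regions` is hypothetical, and `regions_df` is assumed to be the small Parquet table already loaded as a DataFrame.

```python
def smallest_regions(regions_df, n=3):
    """Map region_id to region_name for the n smallest region_id values.

    The function name is illustrative; regions_df is assumed to be a
    small DataFrame with region_id and region_name columns.
    """
    # Sort ascending and cap the row count before collecting, so only
    # n rows are brought back to the driver.
    rows = regions_df.orderBy("region_id").limit(n).collect()
    return {row["region_id"]: row["region_name"] for row in rows}
```

Collecting is reasonable here only because the table is described as small and the result is capped at three rows.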