ARA-C01 Exam Dumps - SnowPro Advanced: Architect Certification Exam

Searching for workable clues to ace the Snowflake ARA-C01 Exam? You’re on the right place! ExamCert has realistic, trusted and authentic exam prep tools to help you achieve your desired credential. ExamCert’s ARA-C01 PDF Study Guide, Testing Engine and Exam Dumps follow a reliable exam preparation strategy, providing you the most relevant and updated study material that is crafted in an easy to learn format of questions and answers. ExamCert’s study tools aim at simplifying all complex and confusing concepts of the exam and introduce you to the real exam scenario and practice it with the help of its testing engine and real exam dumps

A retail company has 2000+ stores spread across the country. Store Managers report that they are having trouble running key reports related to inventory management, sales targets, payroll, and staffing during business hours. The Managers report that performance is poor and time-outs occur frequently.

Currently all reports share the same Snowflake virtual warehouse.

How should this situation be addressed? (Select TWO).

The following statements have been executed successfully:

USE ROLE SYSADMIN;

CREATE OR REPLACE DATABASE DEV_TEST_DB;

CREATE OR REPLACE SCHEMA DEV_TEST_DB.SCHTEST WITH MANAGED ACCESS;

GRANT USAGE ON DATABASE DEV_TEST_DB TO ROLE DEV_PROJ_OWN;

GRANT USAGE ON SCHEMA DEV_TEST_DB.SCHTEST TO ROLE DEV_PROJ_OWN;

GRANT USAGE ON DATABASE DEV_TEST_DB TO ROLE ANALYST_PROJ;

GRANT USAGE ON SCHEMA DEV_TEST_DB.SCHTEST TO ROLE ANALYST_PROJ;

GRANT CREATE TABLE ON SCHEMA DEV_TEST_DB.SCHTEST TO ROLE DEV_PROJ_OWN;

USE ROLE DEV_PROJ_OWN;

CREATE OR REPLACE TABLE DEV_TEST_DB.SCHTEST.CURRENCY (

COUNTRY VARCHAR(255),

CURRENCY_NAME VARCHAR(255),

ISO_CURRENCY_CODE VARCHAR(15),

CURRENCY_CD NUMBER(38,0),

MINOR_UNIT VARCHAR(255),

WITHDRAWAL_DATE VARCHAR(255)

);

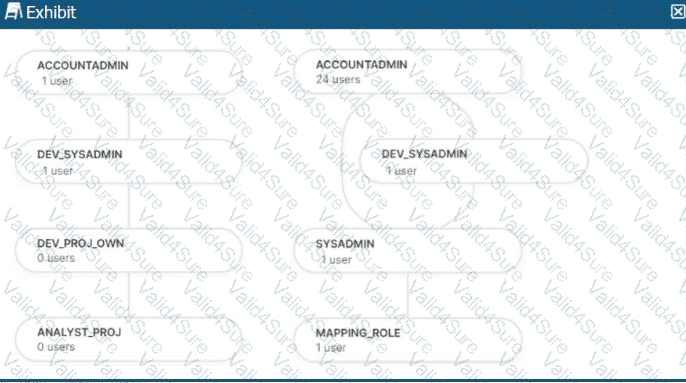

The role hierarchy is as follows (simplified from the diagram):

ACCOUNTADMIN└─ DEV_SYSADMIN└─ DEV_PROJ_OWN└─ ANALYST_PROJ

Separately:

ACCOUNTADMIN└─ SYSADMIN└─ MAPPING_ROLE

Which statements will return the records from the table

DEV_TEST_DB.SCHTEST.CURRENCY? (Select TWO)

In a managed access schema, what are characteristics of the roles that can manage object privileges? (Select TWO).

A user named USER_01 needs access to create a materialized view on a schema EDW. STG_SCHEMA. How can this access be provided?

A Developer is having a performance issue with a Snowflake query. The query receives up to 10 different values for one parameter and then performs an aggregation over the majority of a fact table. It then

joins against a smaller dimension table. This parameter value is selected by the different query users when they execute it during business hours. Both the fact and dimension tables are loaded with new data in an overnight import process.

On a Small or Medium-sized virtual warehouse, the query performs slowly. Performance is acceptable on a size Large or bigger warehouse. However, there is no budget to increase costs. The Developer

needs a recommendation that does not increase compute costs to run this query.

What should the Architect recommend?