Snowflake external tables support partitioning through the PARTITION BY clause, which lets architects define partition columns as expressions that parse the path or file name exposed in the METADATA$FILENAME pseudocolumn. This approach is flexible and scalable, making it well-suited for data lakes that grow over time and may require changes to partition logic (Answer A).

With PARTITION BY, Snowflake computes the partition values itself and adds new partitions whenever the external table metadata is refreshed, so newly arrived data requires no manual intervention or table recreation. This aligns with Snowflake best practices for external table design and minimizes operational overhead.
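As a rough illustration of how a partition value can be computed from a file path, the Python sketch below groups files by a date parsed out of each path. It is a toy model, not Snowflake code: the path layout `<prefix>/<YYYY>/<MM>/<DD>/<file>` and the function names are assumptions made for this example.

```python
from datetime import date


def partition_date_from_path(file_path: str) -> date:
    """Parse a partition value from a path such as 'sales/2024/03/15/part-0001.parquet'."""
    parts = file_path.split("/")
    # Assumed layout (hypothetical): <prefix>/<YYYY>/<MM>/<DD>/<file>
    year, month, day = (int(p) for p in parts[1:4])
    return date(year, month, day)


def group_by_partition(paths):
    """Map each derived partition value to the files that belong to it."""
    partitions = {}
    for path in paths:
        partitions.setdefault(partition_date_from_path(path), []).append(path)
    return partitions


files = [
    "sales/2024/03/15/part-0001.parquet",
    "sales/2024/03/15/part-0002.parquet",
    "sales/2024/03/16/part-0001.parquet",
]
partitions = group_by_partition(files)

# A file that arrives later lands in a new partition with no change to the logic.
files.append("sales/2024/03/17/part-0001.parquet")
partitions_after_arrival = group_by_partition(files)
```

Because the partition value is a pure function of the path, new files fall into the right partition without anyone registering that partition by hand, which is the property the explanation relies on.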

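The other options do not meet the requirement. PARTITION_TYPE = USER_SPECIFIED is a real CREATE EXTERNAL TABLE parameter, but it makes the table owner add and remove partitions manually with ALTER EXTERNAL TABLE ... ADD PARTITION, which is the opposite of automatic partition management. METADATA$EXTERNAL_TABLE_PARTITION is a pseudocolumn used when defining partition columns for user-specified partitions; it is not a setting that accepts a MANUAL value. There is no ADD_PARTITION_COLUMN command for external tables. For the SnowPro Architect exam, understanding the correct and supported syntax for partitioned external tables is essential for designing maintainable data lake architectures.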

=========

QUESTION NO: 62 [Snowflake Ecosystem and Integrations]

A group of marketing and advertising companies want to share data with one another for joint analysis while maintaining strict privacy controls.

How should an Architect design the data sharing solution?

A. Use Data Clean Rooms for privacy-preserving collaboration.

B. Use Secure Data Sharing with standard shares.

C. Use Snowflake Marketplace listings.

D. Use a Data Exchange as a shared data hub.

Answer: A

Snowflake Data Clean Rooms are specifically designed for privacy-preserving collaboration between organizations. They allow multiple parties to perform joint analytics on combined datasets without exposing raw underlying data to one another (Answer A). This makes them ideal for marketing and advertising use cases where sensitive customer data must remain private while still enabling insights such as audience overlap and campaign effectiveness.

Secure Data Sharing and Data Exchanges allow data access but do not inherently prevent participants from seeing raw data. Snowflake Marketplace is designed for broad data distribution rather than tightly controlled, privacy-centric collaboration.

For SnowPro Architect candidates, this question emphasizes selecting the right Snowflake-native collaboration mechanism based on privacy and governance requirements.

=========

QUESTION NO: 63 [Snowflake Data Engineering]

An Architect wants to build an automated ETL pipeline that reads data from an external stage using an external table, performs transformations on changed data, joins with dimension tables, and loads results into a target table.

What should the Architect use?

A. Streams and tasks

B. Dynamic tables

C. Materialized views

D. Snowpipe with auto-ingest

Answer: A

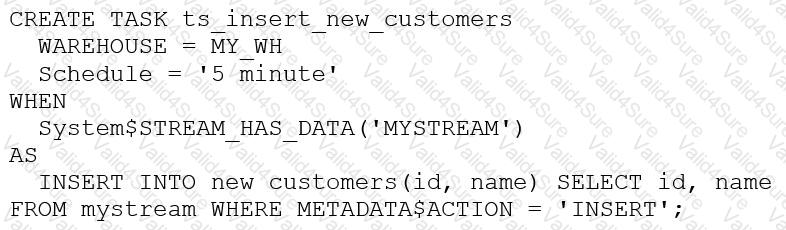

Streams and tasks provide Snowflake’s native framework for building automated, incremental ETL pipelines. A stream tracks changes on its source object (on an external table this is an insert-only stream that records newly registered files; a staging table supports standard streams), while tasks orchestrate the transformation logic and the load into target tables (Answer A).

This approach supports complex transformations, joins with dimension tables, and controlled scheduling or event-driven execution. Materialized views cannot contain joins at all and are not suitable for complex ETL. Dynamic tables simplify transformations but are not designed to consume change data directly from external tables. Snowpipe focuses on ingestion: the COPY statement in a pipe can apply simple column-level transformations, but it cannot join to other tables or run multi-step downstream processing.
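The division of labor between a stream and a task can be sketched with a small in-memory model. This is plain Python, not the Snowflake API: the `Stream` class, `run_task`, and the column names are hypothetical, and the stream is modeled as insert-only (it only notices appended rows).

```python
class Stream:
    """Toy insert-only change tracker: remembers how many source rows were consumed."""

    def __init__(self, source):
        self.source = source
        self.offset = 0

    def has_data(self):
        return self.offset < len(self.source)

    def consume(self):
        # Return only rows added since the last consumption, then advance the offset.
        changes = self.source[self.offset:]
        self.offset = len(self.source)
        return changes


def run_task(stream, dimension, target):
    """One task run: skip when nothing changed, otherwise transform, join, and load."""
    if not stream.has_data():
        return 0
    changes = stream.consume()
    for row in changes:
        target.append({
            "order_id": row["order_id"],
            "amount_usd": round(row["amount_cents"] / 100, 2),        # transformation
            "region": dimension.get(row["store_id"], "UNKNOWN"),      # dimension lookup
        })
    return len(changes)


source_rows = [{"order_id": 1, "store_id": "S1", "amount_cents": 1250}]
store_dim = {"S1": "EMEA"}
target_rows = []
stream = Stream(source_rows)

first = run_task(stream, store_dim, target_rows)    # processes the initial row
second = run_task(stream, store_dim, target_rows)   # nothing new, so nothing is loaded
source_rows.append({"order_id": 2, "store_id": "S9", "amount_cents": 300})
third = run_task(stream, store_dim, target_rows)    # processes only the new row
```

The point of the model is that each run touches only rows that arrived since the previous run, so repeated executions neither reprocess nor duplicate data in the target.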

SnowPro Architect exams frequently test understanding of when to use streams and tasks versus newer abstractions like dynamic tables.

=========

QUESTION NO: 64 [Security and Access Management]

A data share exists between a provider and a consumer account. Five tables are already shared, and the consumer role has been granted IMPORTED PRIVILEGES.

What happens if a new table is added to the provider schema?

A. The consumer automatically sees the new table.

B. The consumer sees the table after granting IMPORTED PRIVILEGES again on the consumer side.

C. The consumer sees the table only after SELECT is granted on the new table to the share on the provider side.

D. The consumer sees the table after USAGE is granted on the database and schema and SELECT is granted on the table to the consumer database.

Answer: C

In Snowflake Secure Data Sharing, adding a new table to an existing schema does not automatically make it visible to consumers. The provider must explicitly grant SELECT on the new table to the share (Answer C). This ensures intentional and controlled data exposure.

Once the grant is applied on the provider side, consumer roles that already have IMPORTED PRIVILEGES will automatically see the new table without requiring additional grants on the consumer account. This separation of responsibilities is a core Snowflake governance principle.

This question reinforces SnowPro Architect knowledge of provider-versus-consumer responsibilities in Secure Data Sharing.

=========

QUESTION NO: 65 [Snowflake Data Engineering]

Which technique efficiently ingests and consumes semi-structured data for Snowflake data lake workloads?

A. IDEF1X

B. Schema-on-write

C. Schema-on-read

D. Information schema

Answer: C

Schema-on-read is a fundamental pattern for Snowflake data lake workloads involving semi-structured data such as JSON, Avro, or Parquet (Answer C). Data is ingested in its raw form and interpreted at query time using Snowflake’s VARIANT type and functions like FLATTEN.
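A minimal sketch of the schema-on-read idea, written in plain Python rather than Snowflake SQL: raw JSON strings are stored untouched, and structure is imposed only when they are read. The sample records and the `flatten_items` helper are invented for this illustration; the helper loosely mirrors what a flatten over a nested array does.

```python
import json

# Raw documents are kept exactly as they arrived; no schema was declared up front.
raw_events = [
    '{"user": "a", "items": [{"sku": "X1", "qty": 2}, {"sku": "Y2", "qty": 1}]}',
    '{"user": "b", "items": [{"sku": "X1", "qty": 5}], "coupon": "SPRING"}',
    '{"user": "c"}',
]


def flatten_items(raw_rows):
    """Interpret each document at read time and emit one row per nested item."""
    for raw in raw_rows:
        doc = json.loads(raw)
        # Documents without an "items" array simply produce no rows.
        for item in doc.get("items", []):
            yield {
                "user": doc["user"],
                "sku": item["sku"],
                "qty": item["qty"],
                "coupon": doc.get("coupon"),  # optional field, absent in older records
            }


rows = list(flatten_items(raw_events))

qty_by_sku = {}
for r in rows:
    qty_by_sku[r["sku"]] = qty_by_sku.get(r["sku"], 0) + r["qty"]
```

Note that the second record carries a `coupon` field the first one lacks, and the third has no `items` at all; none of that required changing how the data was stored, only how it was read.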

This approach provides flexibility as data structures evolve, reduces ingestion complexity, and avoids the need to predefine rigid schemas. Schema-on-write is more appropriate for structured data warehouses but is less efficient for rapidly changing semi-structured data. IDEF1X is a data modeling notation, not an ingestion technique. The Information Schema is a set of metadata views describing the objects in a database, not a way to ingest or query semi-structured data.

For SnowPro Architect candidates, understanding schema-on-read is critical when designing scalable, flexible data lake architectures in Snowflake.