Databricks-Machine-Learning-Professional Exam Dumps - Databricks Certified Machine Learning Professional

Searching for workable clues to ace the Databricks Databricks-Machine-Learning-Professional Exam? You’re on the right place! ExamCert has realistic, trusted and authentic exam prep tools to help you achieve your desired credential. ExamCert’s Databricks-Machine-Learning-Professional PDF Study Guide, Testing Engine and Exam Dumps follow a reliable exam preparation strategy, providing you the most relevant and updated study material that is crafted in an easy to learn format of questions and answers. ExamCert’s study tools aim at simplifying all complex and confusing concepts of the exam and introduce you to the real exam scenario and practice it with the help of its testing engine and real exam dumps

A data scientist has developed a scikit-learn random forest model model, but they have not yet logged model with MLflow. They want to obtain the input schema and the output schema of the model so they can document what type of data is expected as input.

Which of the following MLflow operations can be used to perform this task?

A data scientist is utilizing MLflow to track their machine learning experiments. After completing a series of runs for the experiment with experiment ID exp_id, the data scientist wants to programmatically work with the experiment run data in a Spark DataFrame. They have an active MLflow Client client and an active Spark session spark.

Which of the following lines of code can be used to obtain run-level results for exp_id in a Spark DataFrame?

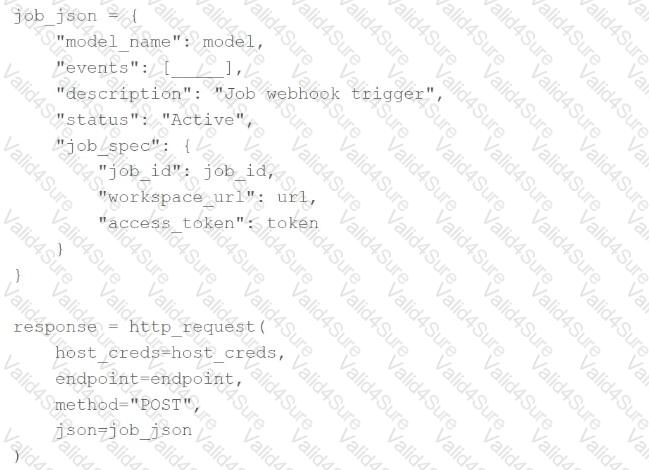

A machine learning engineer is attempting to create a webhook that will trigger a Databricks Jobjob_idwhen a model version for modelmodeltransitions into any MLflow Model Registry stage.

They have the following incomplete code block:

Which of the following lines of code can be used to fill in the blank so that the code block accomplishes the task?

A machine learning engineer wants to log and deploy a model as an MLflow pyfunc model. They have custom preprocessing that needs to be completed on feature variables prior to fitting the model or computing predictions using that model. They decide to wrap this preprocessing in a custom model class ModelWithPreprocess, where the preprocessing is performed when calling fit and when calling predict. They then log the fitted model of the ModelWithPreprocess class as a pyfunc model.

Which of the following is a benefit of this approach when loading the logged pyfunc model for downstream deployment?

Which of the following statements describes streaming with Spark as a model deployment strategy?