Databricks-Certified-Data-Engineer-Associate Exam Dumps - Databricks Certified Data Engineer Associate Exam

Searching for workable clues to ace the Databricks Databricks-Certified-Data-Engineer-Associate Exam? You’re in the right place! ExamCert has realistic, trusted and authentic exam prep tools to help you achieve your desired credential. ExamCert’s Databricks-Certified-Data-Engineer-Associate PDF Study Guide, Testing Engine and Exam Dumps follow a reliable exam preparation strategy, providing you with the most relevant and updated study material, crafted in an easy-to-learn question-and-answer format. ExamCert’s study tools aim to simplify the complex and confusing concepts of the exam, introduce you to the real exam scenario, and let you practice it with the help of the testing engine and real exam dumps.

A data engineer only wants to execute the final block of a Python program if the Python variable day_of_week is equal to 1 and the Python variable review_period is True.

Which of the following control flow statements should the data engineer use to begin this conditionally executed code block?

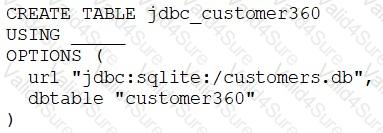
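As a study aid for the question above, here is a minimal sketch of the kind of conditional it describes. The function name `should_run_final_block` is hypothetical and exists only to make the check easy to try; the point is that Python compares values with `==`, combines conditions with `and`, and can test a boolean variable directly.

```python
def should_run_final_block(day_of_week: int, review_period: bool) -> bool:
    """Return True only when both conditions from the question hold."""
    # `==` compares values; a single `=` is assignment and is not valid here.
    # A boolean variable can be tested as-is, without comparing it to True.
    if day_of_week == 1 and review_period:
        return True
    return False


# Hypothetical sample values, chosen only for illustration.
print(should_run_final_block(1, True))
print(should_run_final_block(1, False))
print(should_run_final_block(2, True))
```

In a real program the body of the `if` would hold the final block itself rather than a `return` statement.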

A data engineer needs to create a table in Databricks using data from their organization’s existing SQLite database.

They run the following command:

Which of the following lines of code fills in the above blank to successfully complete the task?

A data engineer is inspecting an ETL pipeline based on a PySpark job that consistently encounters performance bottlenecks. Based on developer feedback, the data engineer assumes the job is low on compute resources. To pinpoint the issue, the data engineer checks the Spark UI and finds that the job has a high CPU time vs. Task time.

Which course of action should the data engineer take?

Which of the following is hosted completely in the control plane of the classic Databricks architecture?

A new data engineering team has been assigned to an ELT project. The new data engineering team will need full privileges on the table sales to fully manage the project.

Which of the following commands can be used to grant full permissions on the table to the new data engineering team?

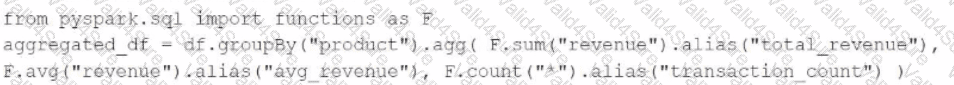

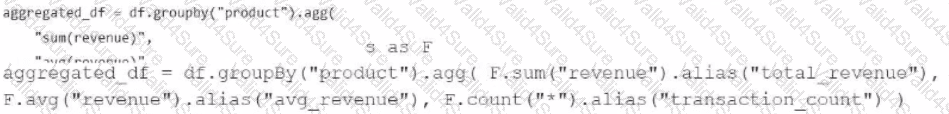

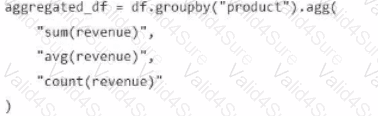

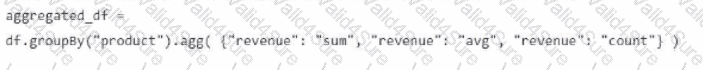

A data engineer has been provided a PySpark DataFrame named df with columns product and revenue. The data engineer needs to compute complex aggregations to determine each product's total revenue, average revenue, and transaction count.

Which code snippet should the data engineer use?

A)

B)

C)

D)
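To make the requested result concrete, the sketch below computes the same three per-product figures (total revenue, average revenue, transaction count) in plain Python, so it runs without a Spark cluster. It is not one of the answer options. In PySpark this kind of multi-metric summary is typically written as a `groupBy` on the key column followed by `agg` with `sum`, `avg` and `count` from `pyspark.sql.functions`. The function name `summarize_revenue` and the sample rows are hypothetical.

```python
from collections import defaultdict


def summarize_revenue(rows):
    """Group (product, revenue) pairs and compute total, average and count."""
    grouped = defaultdict(list)
    for product, revenue in rows:
        grouped[product].append(revenue)

    # One output entry per product, mirroring one row per group in a
    # grouped aggregation.
    return {
        product: {
            "total_revenue": sum(values),
            "avg_revenue": sum(values) / len(values),
            "transaction_count": len(values),
        }
        for product, values in grouped.items()
    }


# Hypothetical sample data standing in for the df described in the question.
sample_rows = [("widget", 10.0), ("widget", 30.0), ("gadget", 5.0)]
summary = summarize_revenue(sample_rows)
print(summary)
```

The key idea is that all three aggregates are computed in one pass over each group, which is what a single `agg` call with several aggregate expressions expresses in PySpark.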

What is the primary function of the Silver layer in the Databricks medallion architecture?

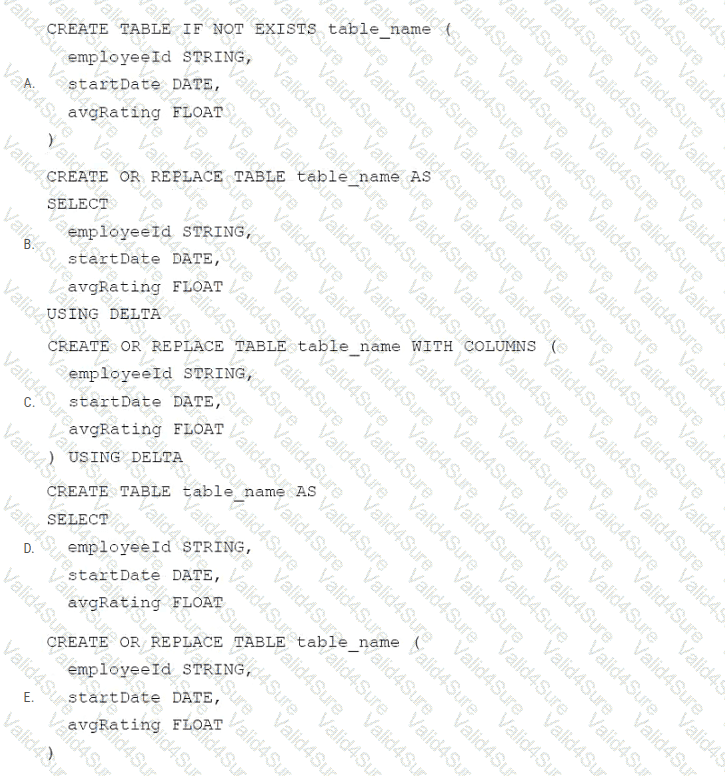

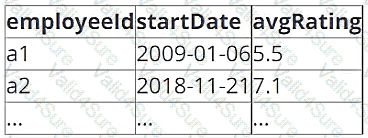

A data architect has determined that a table of the following format is necessary:

Which of the following code blocks uses SQL DDL commands to create an empty Delta table in the above format regardless of whether a table already exists with this name?