DP-700 Exam Dumps - Implementing Data Engineering Solutions Using Microsoft Fabric

Looking for a practical way to pass the Microsoft DP-700 exam? You’re in the right place! ExamCert offers realistic, trusted exam prep tools to help you earn the credential. ExamCert’s DP-700 PDF Study Guide, Testing Engine and Exam Dumps follow a reliable preparation strategy, providing relevant, up-to-date study material in an easy-to-learn question-and-answer format. The study tools aim to simplify the exam's complex and confusing concepts, and the testing engine and exam dumps let you practice under conditions that resemble the real exam.

You have an Azure subscription that contains a blob storage account named sa1. sa1 contains two files named File1.csv and File2.csv.

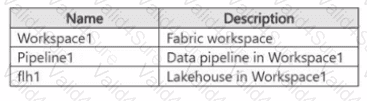

You have a Fabric tenant that contains the items shown in the following table.

You need to configure Pipeline1 to perform the following actions:

• At 2 PM each day, process File1.csv and load the file into flh1.

• At 5 PM each day, process File2.csv and load the file into flh1.

The solution must minimize development effort. What should you use?

You need to ensure that processes for the bronze and silver layers run in isolation. How should you configure the Apache Spark settings?

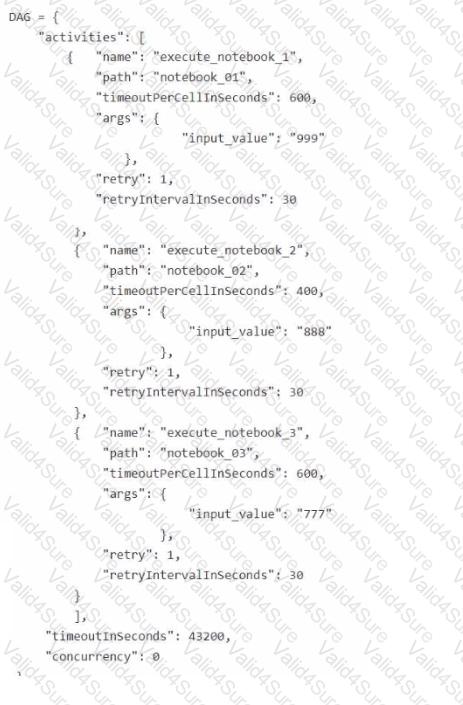

You are building a Fabric notebook named MasterNotebook1 in a workspace. MasterNotebook1 contains the following code.

You need to ensure that the notebooks are executed in the following sequence:

1. Notebook_03

2. Notebook_01

3. Notebook_02

Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.
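The code in MasterNotebook1 is not reproduced on this page, so the following is only an illustrative sketch of how a notebook sequence can be expressed as a dependency graph. It assumes a DAG dictionary with an `activities` list whose entries carry `name`, `path` and `dependencies` keys, which is the shape understood to be accepted by `notebookutils.notebook.runMultiple` in Fabric notebooks. The dictionary contents and the `execution_order` helper are hypothetical, and the ordering is checked locally with Python's standard `graphlib` module rather than by running anything in Fabric.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG: each activity lists the notebooks that must finish first.
# The key names mirror the assumed runMultiple DAG format; they are not taken
# from the question's (missing) code.
dag = {
    "activities": [
        {"name": "Notebook_01", "path": "Notebook_01", "dependencies": ["Notebook_03"]},
        {"name": "Notebook_02", "path": "Notebook_02", "dependencies": ["Notebook_01"]},
        {"name": "Notebook_03", "path": "Notebook_03", "dependencies": []},
    ]
}


def execution_order(dag):
    """Return the activity names in an order that respects all dependencies."""
    # Map each activity to the set of activities it depends on.
    graph = {a["name"]: set(a.get("dependencies", [])) for a in dag["activities"]}
    return list(TopologicalSorter(graph).static_order())


order = execution_order(dag)
print(order)

# Inside a Fabric notebook, the same dictionary could then be passed to
# notebookutils.notebook.runMultiple(dag) (assumed API, not executed here).
```

Because the dependencies form a single chain, the order is fully determined: Notebook_03 runs first, then Notebook_01, then Notebook_02.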

You have a Fabric workspace that contains a lakehouse named Lakehouse1.

You plan to create a data pipeline named Pipeline1 to ingest data into Lakehouse1. You will use a parameter named param1 to pass an external value into Pipeline1. The param1 parameter has a data type of int.

You need to ensure that the pipeline expression returns param1 as an int value.

How should you specify the parameter value?

You need to recommend a solution for handling old files. The solution must meet the technical requirements. What should you include in the recommendation?

You need to recommend a solution to resolve the MAR1 connectivity issues. The solution must minimize development effort. What should you recommend?

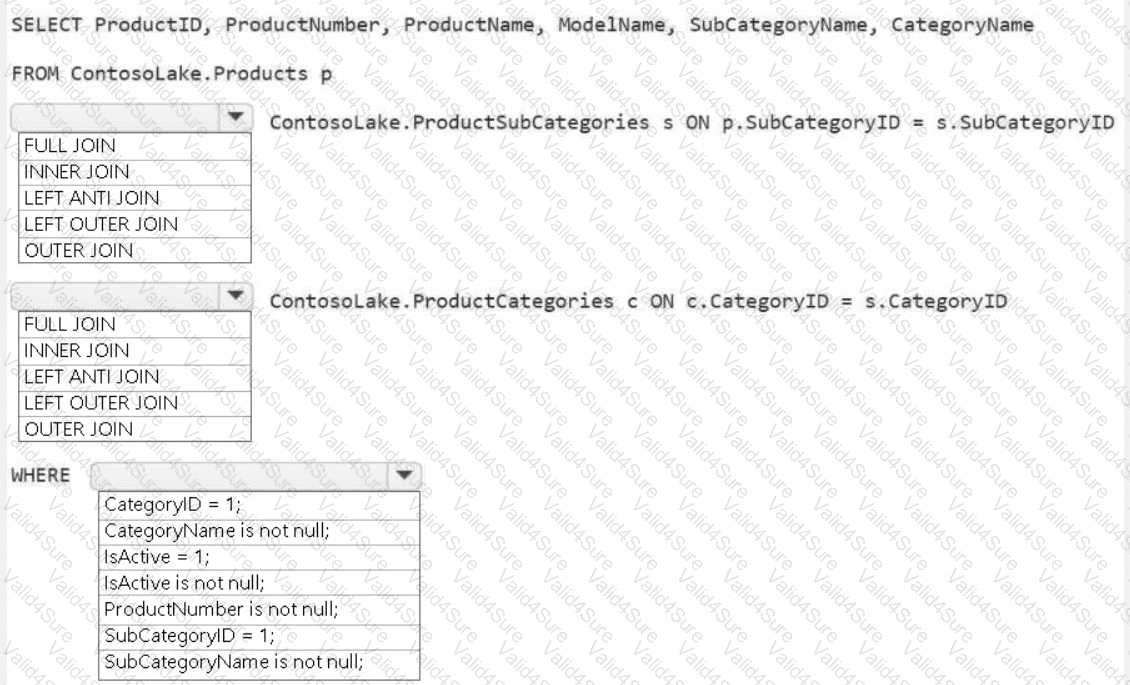

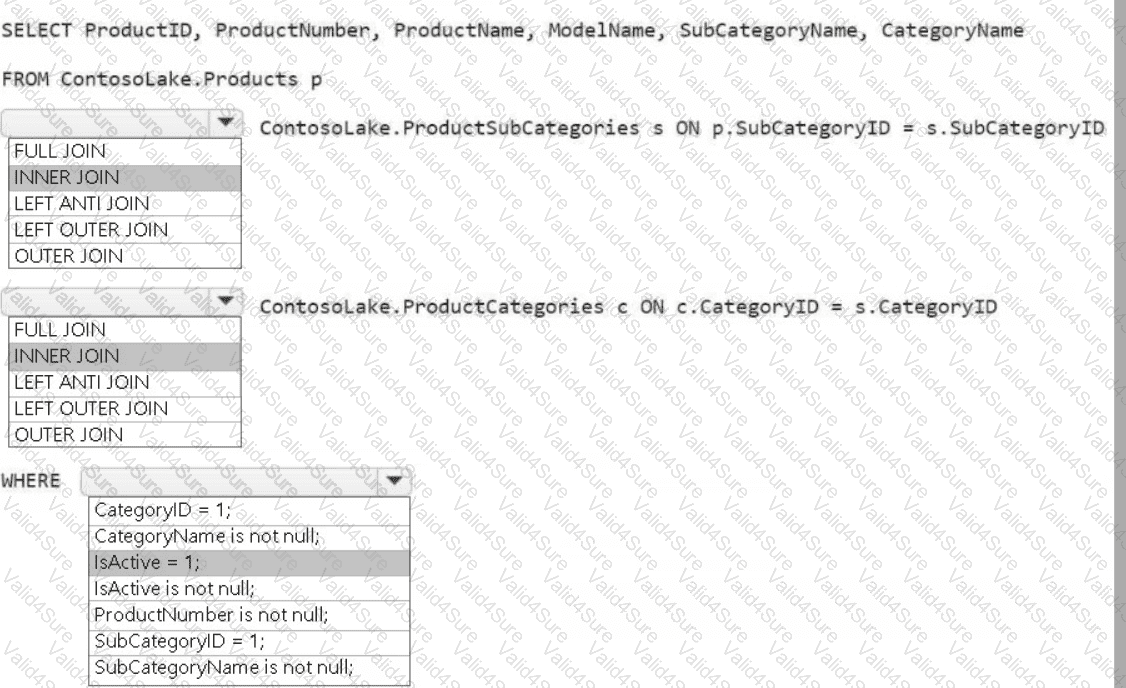

You need to create the product dimension.

How should you complete the Apache Spark SQL code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to schedule the population of the medallion layers to meet the technical requirements.

What should you do?
